Handout for Tilgjengelige Apps fra Design til Bruk

Creating apps that use accessible technology isn’t that difficult, helps your app reach an even larger audience, and “is the right thing to do.” This document is a supplement to what people will see in the tutorial today[1].

Definitions

Let’s take a quick look at some definitions.

Universal Design

Universal design is a term that is used more in Norway and the United States than in the rest of Europe (e-inclusion is a more popular term there). The original definition comes from Ron Mace:

Universal design is the design of products and environments to be usable by all people, to the greatest extent possible, without the need for adaptation or specialized design.

The Center for Universal Design at North Carolina State University has created seven principles for Universal Design:

- Equitable use

- Flexibility in use

- Simple and intuitive

- Perceptible information

- Tolerance for error

- Low physical effort

- Size and space for approach and use

Universal design can refer to both a method and an outcome. Using universal design principles during the design and creation of a product (including having people with disabilities test the product) should result in a universally designed product.

Accessibility

There are varied definitions of accessibility. One popular definition is that making something accessible means creating something that is usable by people with disabilities. Another is creating something that is usable by as many people as possible. The difference is subtle, but the second definition encompasses a much larger group. We could all use accessibility features depending on the situation. One can even look at the recent announcement of Siri for the iPhone 4S as a way of making the phone more accessible through hands-free and eyes-free operation.

This also means that you can create alternate ways of accessing something. For example, an HTML document with properly tagged headings, alternate text for pictures, tables marked up correctly with headers, and so on will be accessible to people who use screen reading software, since the software can read it. That doesn’t mean the web page is universally designed (for example, the CSS could render the entire page unreadable to sighted people, or the page might be in a language that your audience doesn’t understand).
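To make the HTML example concrete, here is a minimal sketch in Python of a script that looks for the three things mentioned: headings, alternate text for pictures, and header cells in tables. The names AccessibilityAudit and audit are made up for this handout, and passing these checks does not prove that a page is accessible; it only catches some obvious omissions.

```python
from html.parser import HTMLParser


class AccessibilityAudit(HTMLParser):
    """Collects a few accessibility-related facts about an HTML document."""

    def __init__(self):
        super().__init__()
        self.headings = 0             # number of <h1>..<h6> elements
        self.images_missing_alt = []  # src of each <img> without alt text
        self.tables = 0               # number of <table> elements
        self.header_cells = 0         # number of <th> cells

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings += 1
        elif tag == "img":
            # Simplification: decorative images may legitimately use alt="",
            # but this check flags them as well.
            if not (attributes.get("alt") or "").strip():
                self.images_missing_alt.append(attributes.get("src") or "(no src)")
        elif tag == "table":
            self.tables += 1
        elif tag == "th":
            self.header_cells += 1


def audit(html):
    """Return a list of human-readable problems found in the HTML string."""
    parser = AccessibilityAudit()
    parser.feed(html)
    parser.close()

    problems = []
    if parser.headings == 0:
        problems.append("no headings")
    for src in parser.images_missing_alt:
        problems.append("image without alt text: " + src)
    if parser.tables and parser.header_cells == 0:
        problems.append("table without header cells")
    return problems
```

Calling audit on the text of a page returns a list of problems; an empty list only means that none of these particular omissions were found.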

Laws and Rules

Here is a quick run-through of the information concerning accessibility and the law. Specifically, we’ll look at Section 508, the Riga Declaration, and Norway’s Discrimination and Accessibility Law.

Section 508

This is probably one of the better-known laws about accessibility. Section 508 refers to an amendment to the U.S. Rehabilitation Act of 1973. Its purpose is to ensure that all information technology purchased by the U.S. Federal Government is accessible. It has gone through several revisions to make things clearer. While one can get around it on a technicality (e.g., by saying that no other technology exists for this at the moment), all technology that receives funding from the Federal Government must eventually be accessible. There is also a set of Section 508 standards to help ensure compliance. These cover:

- Software applications and operating systems

- Web-based intranet and Internet information and applications

- Telecommunications products

- Video and multimedia products

- Self-contained, closed products

- Desktop and portable computers

These standards may be helpful in checking accessibility on your own products.

Riga Declaration

The Riga Declaration was a declaration by the EU and EFTA/EEA countries committing to create an “inclusive and barrier-free” information society. Signed in 2006, it encouraged countries to bring more people onto the Internet, ensure all public Web sites are accessible, and enact recommendations on practices for accessibility requirements. It also required all countries to enact laws requiring that all new ICT be accessible.

Discrimination and Accessibility Law

The Norwegian Lov om forbud mot diskriminering på grunn av nedsatt funksjonsevne, more commonly known as Diskriminerings- og tilgjengelighetsloven or DTL, is Norway’s answer to the Riga Declaration. Section 9 of the law lays out the requirement that public operations must ensure that things are universally designed within the activity. This includes ICT. Private enterprises that target the general public must follow these rules as well. So, unless you are targeting a special, private group, your new ICT must be universally designed.

Section 11 of the law describes what recommendations will be used for checking that something is universally designed. The delivery of these recommendations has been delayed until early 2013, but this does not mean that you can ignore universal design. For example, the Equality and Anti-discrimination Ombud (Likestillings- og diskrimineringsombudet) pointed out earlier this year that one online bank’s login method was not universally designed and was in violation of the DTL.

TalkBack and VoiceOver

Here are some quick instructions on enabling both TalkBack and VoiceOver on devices.

TalkBack

All of this was tried with the Nexus S. Different versions of Android may require other methods to work. As we will see, the Nexus S is probably not the best phone for doing accessibility work on Android.

Basics

Android accessibility is based on the traditional idea that working with apps on Android is similar to using a desktop application with only a keyboard. That is, you navigate around the app using some sort of arrow key navigation (for example, using a trackball or D-Pad on the phone). By default, you do not interact directly with the touchscreen elements like you do with VoiceOver. This does not mean that you cannot use the touchscreen. Many apps do use the touchscreen for gestures to control them.

Which Phone?

Android Accessibility recommends a phone that has both some way of navigating the screen (for example, a trackball or keypad) and a physical keyboard. It does not recommend a touchscreen-only phone, though you can go pretty far with a touchscreen-only phone like the Nexus S, assuming you are sighted. See below for more information.

The phone should also be running a version of Android later than 1.6. Obviously, a later version of Android makes accessibility work better. The current version available for phones is 2.3 (Gingerbread), though later versions for phones will certainly become available.

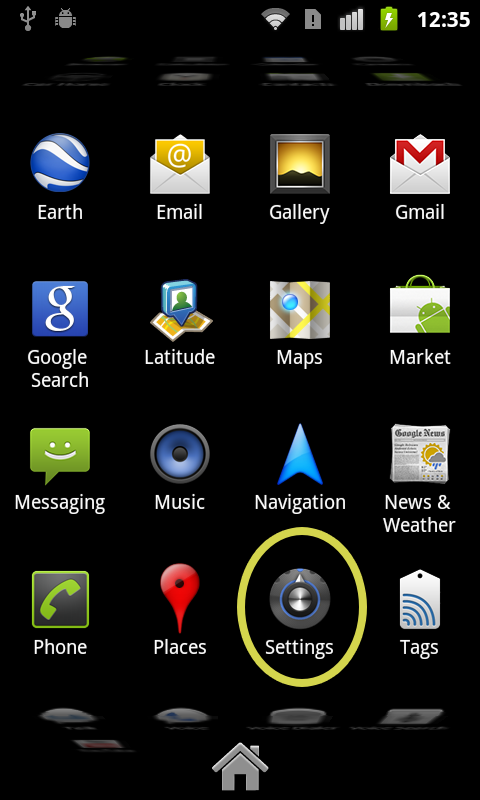

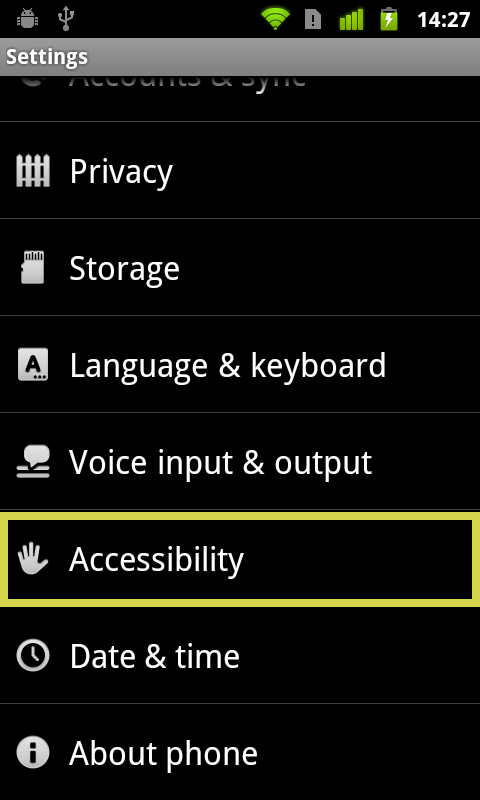

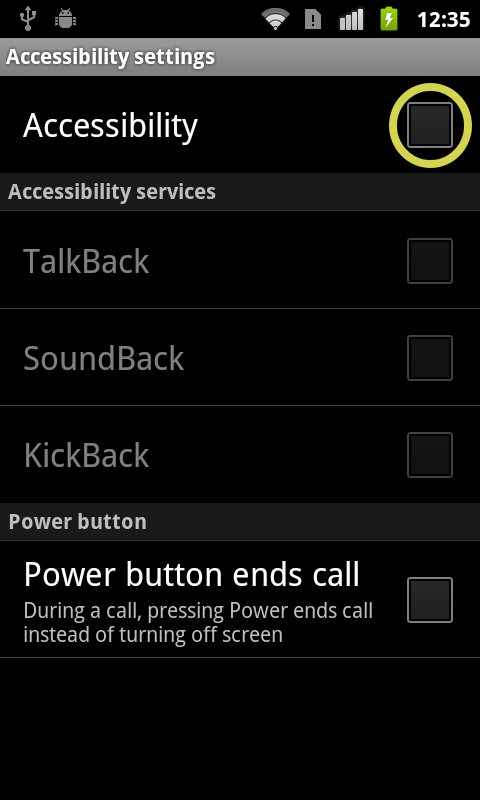

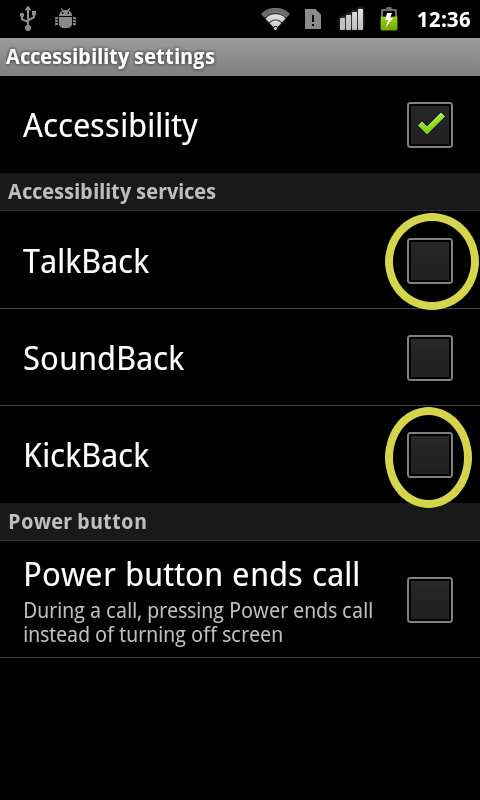

Enabling Accessibility

Here are the step-by-step instructions on how you can enable accessibility on Android. Specifically, we will look at how to do this with the Nexus S. These instructions assume that you are able to see and manipulate the screen. Sadly, you cannot enable accessibility if you cannot use the phone without it.

- Ensure you have installed the latest version of TalkBack from the Android Market (especially if you are using the Nexus S).

- Go to Settings.

- Navigate to Accessibility.

- Enable Accessibility.

- Enable TalkBack (and optionally KickBack).

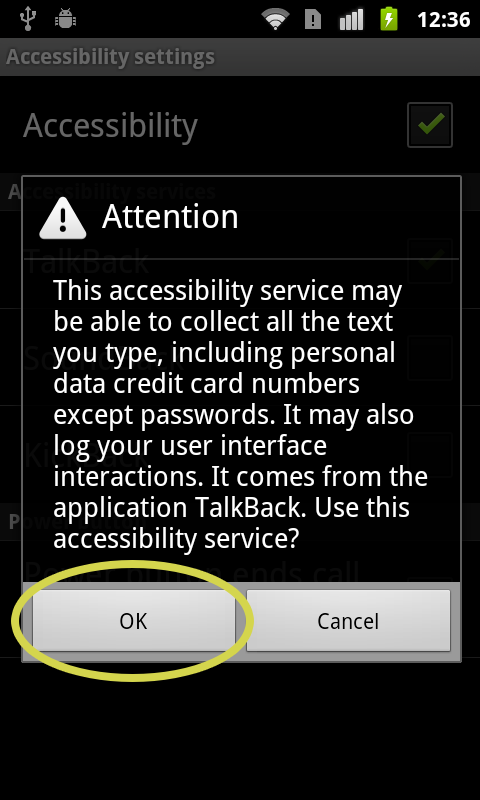

- Accept the security implications.

- Go to the Home Screen.

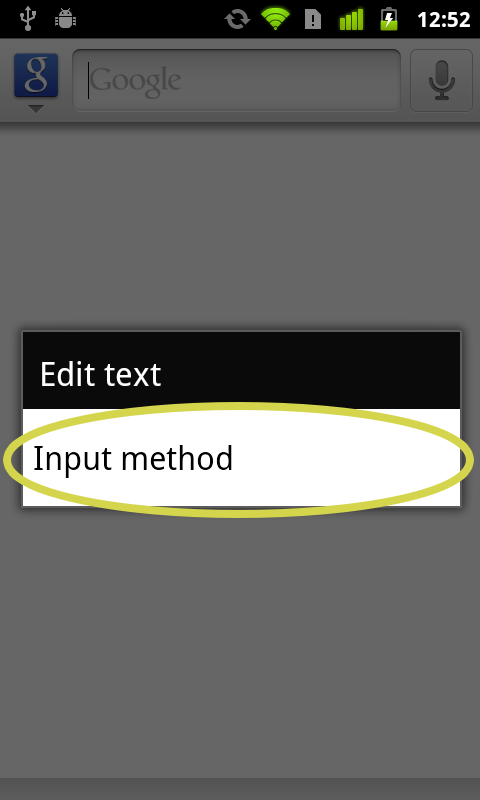

- Go to some place that allows you to select input methods. The easiest is to just focus on the Google search bar.

- Once you have a keyboard up, tap and hold on the input screen to bring up the context menu.

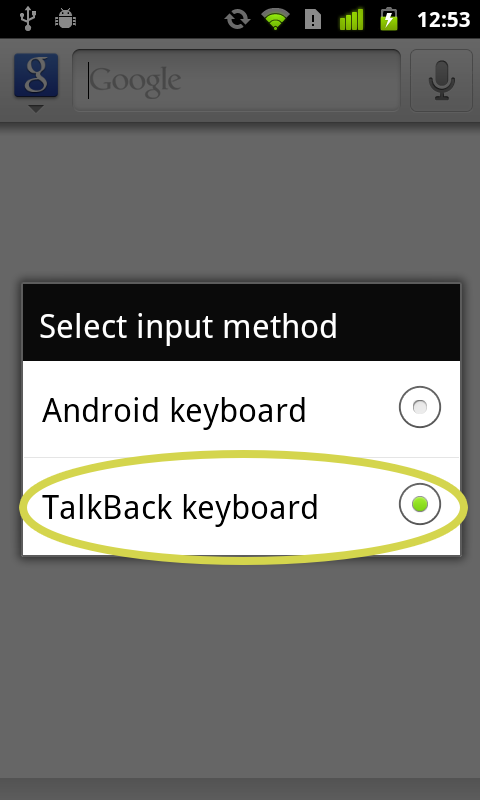

- Select the TalkBack keyboard.

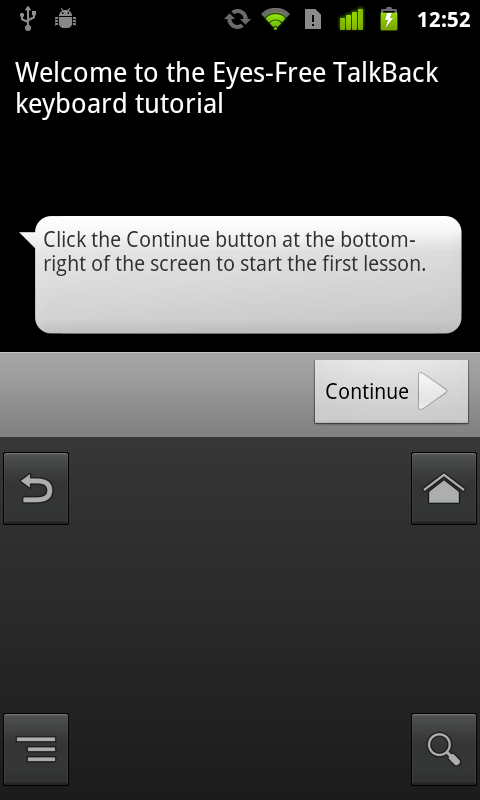

- This will ask if you want to run the TalkBack tutorial. If this is the first time you've used this input method, running the tutorial is recommended.

Several voices ship with the Nexus S (English, French, German, Italian, and Spanish). If you install SVOX Classic from the Android Market, you can then install several other voices (including Norwegian).

The Nexus S works for many things, but there are several things that do not work correctly with the current TalkBack keyboard. These include:

- Menus (both context and those from the “menu” button).

- Alerts (they are read just fine, but you cannot navigate between the two buttons).

- Notifications (TalkBack will read the notifications, but it will not allow you to clear them or act on them).

All of these things require you to “use your eyes” on the Nexus S (and likely other touchscreen phones for the moment).

Missing pieces of the puzzle

Currently WebViews and MapViews are not really accessible. There are a couple of “accessible” web browsers that use alternate ways of navigation, but when you start to use a web view in your application, you are essentially stuck. This has been identified by Google and will be fixed in a future release.

VoiceOver

Since the iPhone 3GS in 2009, Apple has included a screen reader called VoiceOver on its phones. VoiceOver is standard on all iPhones now. There is no additional software to buy, and one only needs to activate VoiceOver to use it. This can be done on the phone itself, or when it is connected to a Mac or PC through iTunes (which is accessible through screen readers). Another bonus: VoiceOver on the iPhone includes voices for 21 languages (Norwegian included).

VoiceOver uses gestures to interact with the phone. The typical interaction involves tapping on the screen to select an item and then double-tapping to activate that item. Other gestures help with navigation. For example, you can flick with three fingers in the direction you want to change pages (up or down, left or right). Flicking up with two fingers reads all the items on the screen, or you can drag your finger across the screen to get an idea of the various elements on it. There is also a Rotor Control, typically used in the web browser to “dial in” the level of granularity at which you want to navigate a page (for example, links only, form elements, or headings only). Excluding the Rotor Control, there are 19 key gestures that you need to know about. Including the two rotor gestures (dial left and dial right), that’s 21 gestures, though in most circumstances you use only a few of them.

It takes a little bit of time to get used to this different way of interacting with the iPhone, but one can pick up the most commonly used gestures quickly and begin to use the applications. Apple has ensured that the applications that ship with the iPhone are accessible and provides a developer API for making applications on the App Store accessible as well. This will be expanded on below.

A small note about phones running other operating systems

Neither Windows Phone nor Symbian has any real accessibility features built in at the moment. Symbian does have software that works for some applications, but this is done through backdoors that are not available to normal application writers. Microsoft has admitted that it has “been incompetent on this,” and Windows Phone 7 includes no accessibility software (7.5 “Mango” has some speech recognition features and other small accessibility features, but nothing that would help someone write an accessible application).

While one can hope that this eventually will be fixed, it seems for the time being that one cannot write a program that will be accessible on these platforms.

Development

Some quick notes for the code session. It’s probably better to take a look at the source code. The broad strokes are included below.

Android Development

Most of the work with development involves two things, which are covered in the Android Documentation. These boil down to:

- Ensure that the content description property is set for views that need it.

- Post proper accessibility events from your custom views.
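The first point can be checked mechanically, at least for layouts defined in XML. The following is a rough sketch in Python (it is not part of the What’s On source code) that scans a layout for image views without an android:contentDescription attribute. The function name views_missing_description and the choice of ImageView and ImageButton as the views that “need it” are assumptions made for the sake of the example; custom views and the accessibility events from the second point still have to be checked by hand with TalkBack.

```python
import xml.etree.ElementTree as ET

# Namespace used by the android: prefix in layout files.
ANDROID_NS = "http://schemas.android.com/apk/res/android"
CONTENT_DESCRIPTION = "{%s}contentDescription" % ANDROID_NS
VIEW_ID = "{%s}id" % ANDROID_NS

# Assumption: views that show only graphics and so have no text to read aloud.
NEEDS_DESCRIPTION = {"ImageView", "ImageButton"}


def views_missing_description(layout_xml):
    """Return the ids (or tag names) of image views lacking a description."""
    root = ET.fromstring(layout_xml)
    missing = []
    for element in root.iter():
        if element.tag in NEEDS_DESCRIPTION and not element.get(CONTENT_DESCRIPTION):
            # Fall back to the tag name when the view has no android:id.
            missing.append(element.get(VIEW_ID, element.tag))
    return missing
```

Running this over each file in a project's layout directory gives a quick list of views to fix before you start testing with a screen reader.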

iPhone Development

Most of the details about making the What’s On application accessible are covered in a previous project.

User Testing

I assume that the majority of the people attending this tutorial know a bit about how to run a user test, so I won’t cover too much there. I will talk a little bit about what it takes to include people with disabilities.

Some organizations may even be able to help you run user tests with people with disabilities (feel free to contact me for more information).

Picking up recruits

Probably the best way to recruit people with disabilities is to contact the user organizations that exist for each group. These include:

- Blindeforbundet

- Dysleksi Norge

- Døveforbundet

- Handikapforbundet

A key point to realize is that the people recruited for the user tests will be volunteers, so it’s a good idea to give them some sort of compensation, which is rather standard practice for commercial user tests anyway. A good way to do that is with a gift card, especially for people who have vision disabilities.

Reporting

Another point to keep in mind is that a person’s disability is sensitive personal information (personopplysninger), which means that you must report it to Datatilsynet (the Norwegian Data Protection Authority). If this is an isolated activity, you only have to fill out a report. If you are doing this more often, you may wish to obtain a license for handling personal information. Another option is to look at getting a privacy ombudsman.

References

Here is a list of the references from my last slide of the talk.

- Android: Designing for Accessibility

  - Google’s guide for Android developers about accessibility.

- Accessibility Programming Guide for iOS

  - Apple’s guide for iOS developers about accessibility.

- What’s On source code

  - The source code to both the iOS and Android versions of the app, including both the before and after versions.

- Making an Application Accessible on the iPhone 3GS

  - Documenting my work on making the What’s On app accessible on iOS 3.

- Android Accessibility

  - An independent web site documenting accessible applications for Android.

- The Eyes-Free Project from Google

  - The home for Google’s accessibility project. Lots of good information about accessibility for Android phones.

[1] Sorry this is in English; I didn’t have time to translate my thoughts.