Research

General research interests

- Natural language processing;

- Discourse and dialogue modeling;

- Spoken dialogue systems;

- Privacy-preserving methods in NLP and AI;

- Neural machine translation;

- Planning, reasoning and learning under uncertainty;

- Social and cognitive robotics;

- Machine learning & statistical modelling.

Dialogue Systems

Spoken language is a natural form of communication for us humans. Since childhood, we have all learned how to understand and produce speech in order to interact with one another, and much of our waking life is spent in social interactions mediated through language. In many ways, the human brain is "wired" for spoken dialogue. But how can we develop artificial agents (such as virtual assistants like Siri or Alexa, or social robots) that can truly communicate with human users via languages such as English, French or Norwegian?

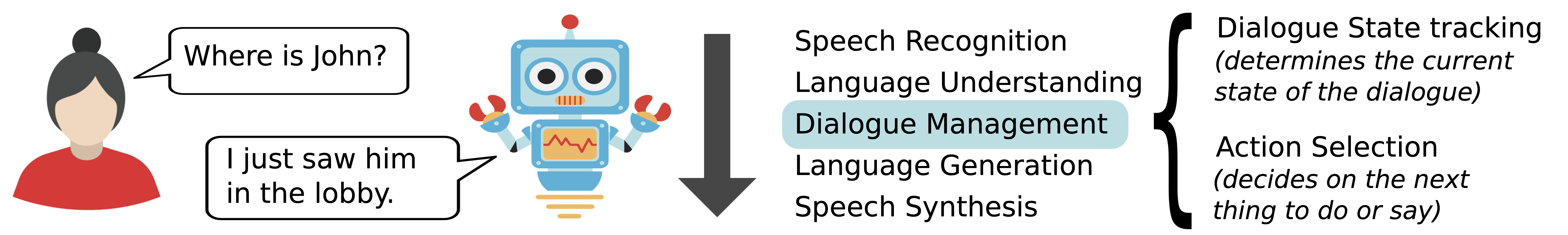

I am interested in how to develop dialogue systems that can scale up to rich conversations. My research focuses more specifically on dialogue management, which is the part of a dialogue system responsible for deciding what to say or do at a given time. Current dialogue managers are often built with machine learning methods (such as deep neural networks) trained on dialogue data. However, current models are difficult to apply to rich conversational contexts that evolve over time. They also require large amounts of dialogue data to learn useful behaviours.

In our latest project, GraphDial, we investigate an alternative approach to dialogue management based on graphs as the core representation of the dialogue state. Graphs are well suited to encode rich interactions that include multiple entities (such as places, persons, objects, tasks or utterances) and their relations. We also look at the use of weak supervision for dialogue management. Weak supervision is an emerging AI paradigm designed to provide machine learning models with indirect training data extracted from heuristics or domain knowledge.
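To make the idea of a graph-based dialogue state more concrete, here is a minimal sketch in Python. It is only an illustration and not the representation used in GraphDial: the class `DialogueGraph`, its methods, and the entity and relation names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DialogueGraph:
    """Dialogue state as a labelled directed graph (illustrative only)."""
    nodes: dict = field(default_factory=dict)  # node id -> entity type
    edges: set = field(default_factory=set)    # (source, relation, target)

    def add_entity(self, node_id, entity_type):
        self.nodes[node_id] = entity_type

    def relate(self, source, relation, target):
        # Relations may only connect entities already present in the state.
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added as entities first")
        self.edges.add((source, relation, target))

    def neighbours(self, source, relation):
        # All targets reachable from `source` through `relation`, sorted.
        return sorted(t for (s, r, t) in self.edges
                      if s == source and r == relation)


# Hypothetical state after the user says something about a mug.
state = DialogueGraph()
state.add_entity("user", "person")
state.add_entity("kitchen", "place")
state.add_entity("mug", "object")
state.add_entity("utt1", "utterance")
state.relate("utt1", "spoken_by", "user")
state.relate("utt1", "mentions", "mug")
state.relate("mug", "located_in", "kitchen")

print(state.neighbours("utt1", "mentions"))
```

In such a representation, each new utterance adds nodes and edges, and a dialogue manager can query the graph (for instance, which objects the last utterance mentions) when selecting its next action.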

In my PhD work, I developed a hybrid approach to dialogue management that combines rich linguistic knowledge (pragmatic rules, models of dialogue structure) with probabilistic models for planning and learning under uncertainty in a single, unified framework. This work culminated in the release of the OpenDial toolkit, which allows system developers to construct dialogue systems with a new framework based on probabilistic rules. The approach has been validated in several human-robot interaction experiments, using a Nao robot as the platform.

Text Anonymisation

Privacy is a fundamental human right (Art. 12 of the Universal Declaration of Human Rights) and is protected by multiple national and international legal frameworks such as the General Data Protection Regulation (GDPR) newly introduced in Europe. This right to privacy imposes constraints on the usage and distribution of data including personal information, such as emails, court cases or electronic patient records. In particular, personal data cannot be distributed to third parties (or even used for secondary purposes) without legal ground, such as the explicit consent of the individuals to whom the data refers.

As such data is often highly valuable (in particular for scientific research), one solution is to rely on anonymisation techniques to protect the privacy of registered individuals. Unfortunately, current anonymisation methods do not work for unstructured formats such as text documents. As a consequence, text anonymisation is still mostly done manually. This process, however, is very costly, prone to human errors and inconsistencies, and difficult to scale to large numbers of texts. This is problematic, as text documents constitute a large part of most data sources (for instance, patient records are mostly made of texts).

In our current CLEANUP project, we work to address this technological gap and develop new machine learning models to automatically anonymise text documents. We also look at new methods to evaluate the quality of text anonymisation techniques and connect these metrics to legal requirements. Finally, the project investigates how these technical solutions can be integrated into organisational processes, in particular how to perform quality control and adapt the anonymisation to the specific needs of the data owner.
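As a rough illustration of the final masking step in automatic text anonymisation, the sketch below replaces already-detected personal identifiers with category placeholders. It is not the CLEANUP method: the function `mask_spans`, the example sentence and the span labels are hypothetical, and the spans are assumed to come from some upstream detector (for instance a machine learning model).

```python
def mask_spans(text, spans):
    """Replace each (start, end, label) span with a [LABEL] placeholder.

    Spans use character offsets with an exclusive end and must not overlap.
    """
    pieces = []
    cursor = 0
    for start, end, label in sorted(spans):
        if start < cursor:
            raise ValueError("overlapping spans")
        pieces.append(text[cursor:start])  # keep the text before the span
        pieces.append(f"[{label}]")        # mask the span itself
        cursor = end
    pieces.append(text[cursor:])           # keep the remaining text
    return "".join(pieces)


# Hypothetical detector output for an invented sentence.
text = "Anna Berg was admitted in Oslo."
spans = [(0, 9, "PERSON"), (26, 30, "LOCATION")]
masked = mask_spans(text, spans)
print(masked)
```

The difficult part of the problem lies in deciding which spans to mask and in measuring how much re-identification risk remains afterwards, which this sketch deliberately leaves out.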

Machine Translation

In my postdoctoral research work, I investigated how to improve machine translation technology in conversational domains. Machine translation, known by the general public through applications such as Google Translate, is the automatic translation from one language to another through a computer algorithm - for instance, translating from Japanese to Norwegian or vice versa. Although great progress has been made over the last decade (mainly due to the development of robust statistical techniques), machine translation technology often remains poor at adapting its translations to the relevant context. In order to translate a dialogue (say, film subtitles from English to Norwegian), current translation systems typically operate one utterance at a time and ignore the global coherence and structure of the conversation.

My postdoctoral project aimed to make machine translation systems more “context-aware” by developing new translation methods that can dynamically adapt their outputs to the surrounding dialogue context. More specifically, the project sought to demonstrate how to automatically extract contextual factors from dialogues and integrate these factors into a state-of-the-art statistical machine translation system. The main goal was to show that this context-rich approach can produce translations of higher quality than standard methods. In particular, the project examined how these new translation methods could be practically employed to produce high-quality translations of film subtitles. Although the experiments covered only a limited set of languages (such as English-Norwegian), the translation techniques developed in the project were meant to be language-independent and could in principle be applied to any language pair. In the longer term, speech-to-speech interpretation (the task of automatically translating speech from one language to another, in real time) is another possible application of this work.